To be at least somewhat fair to Google, their push to develop new technology to “measure skin tone” also measures how brown or Black you are. And at least on the surface, they’re not talking about a system to provide digital enhancements to Critical Race Theory programs. (Or at least… not yet.) The new project, as described to Reuters, is purportedly trying to improve facial recognition software and determine if many of the programs available on the market today are “biased” against persons of color. If you find yourself thinking that a software program is incapable of secretly harboring a racist agenda, I would definitely agree with you. But that doesn’t mean that the algorithms can’t be seriously flawed and produce spectacularly incorrect results when broken down along racial lines. Assuming they manage to pull this off, however, how long do you think it will be before this new technology is unleashed in the field of “racial justice” applications?

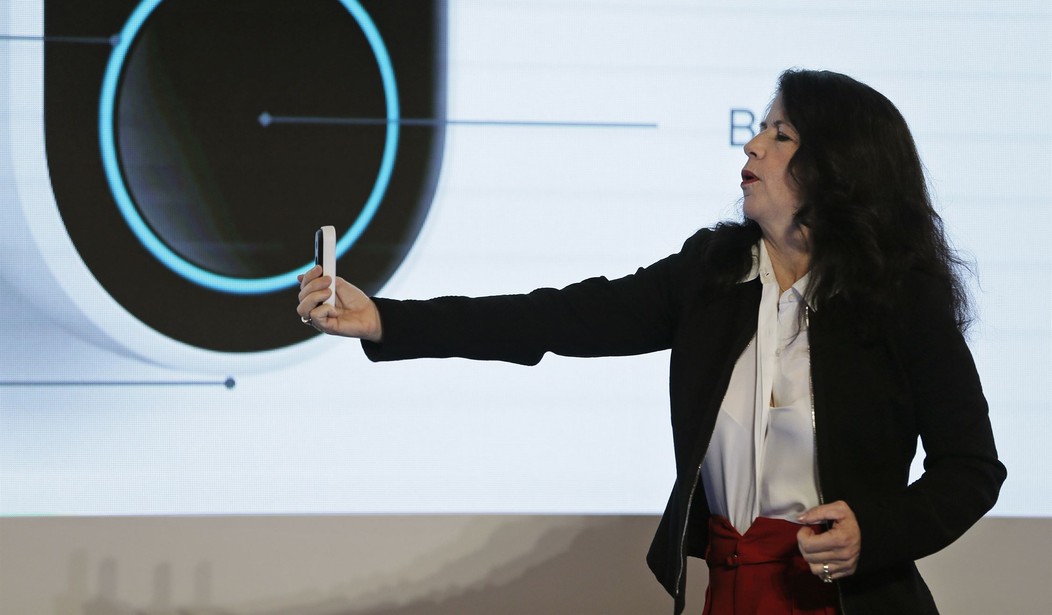

Google told Reuters this week it is developing an alternative to the industry standard method for classifying skin tones, which a growing chorus of technology researchers and dermatologists says is inadequate for assessing whether products are biased against people of color.

At issue is a six-color scale known as Fitzpatrick Skin Type (FST), which dermatologists have used since the 1970s. Tech companies now rely on it to categorize people and measure whether products such as facial recognition systems or smartwatch heart-rate sensors perform equally well across skin tones.

Critics say FST, which includes four categories for “white” skin and one apiece for “black” and “brown,” disregards diversity among people of color.

In the past, we’ve examined some of the glaring flaws in facial recognition software during the early years of its development. Amazon’s Rekognition software has been hilariously bad at its job when measured against racial benchmarks. During public testing of the first release, it identified white males correctly 100% of the time and Hispanic males more than 90% of the time. But it did worse with white females, misidentifying them as males 7% of the time. When asked to identify Black females, its success rate was well below half, and in almost one-third of cases it identified them as men.

This led to some results that were both amusing and disturbing. When the ACLU tested the software by scanning the images of all of California’s legislators and comparing them to a database of tens of thousands of mugshots, it identified more than two dozen of the (mostly non-white) elected officials as criminals. Of course, this is California we’re talking about, so maybe it wasn’t that far off the mark.

But how could emotionless software get the races so wrong? Some suspected that the inherent bias of the programmers had carried over to the product, but now it looks like it’s a bit more complicated than that. I was previously unaware of the Fitzpatrick Skin Type (FST) scale, and that could wind up being the culprit. Given the wide spectrum of skin pigmentation among humans, how did they set up the FST to identify four different tones of “white” and only one tone each for “brown” and “Black”? If that’s what has been leading to the disparity in the results, perhaps it’s fixable, though it sounds like that is going to take a lot of work.

Assuming Google gets this working, will the software find its way into the whole racial justice and “evils of whiteness” debate? Good question. One of the main stumbling blocks during all of these debates, at least for me, is the crazy way that social justice advocates so blithely talk about “white people” and “people of color” as if it’s an either-or choice. How white do you have to be to be considered by default as part of the “problem with whiteness?” How Black or brown will you have to rate on the coming software scale to qualify as part of the “underserved communities?”

The first time I heard about the uproar in England and the racist commentary supposedly being flung around regarding Harry Windsor’s new bride, Meghan Markle, I was frankly taken aback. I’m not an expert on celebrities, but I’d seen plenty of pictures of her and had absolutely no idea she was Black. She certainly looked white to me and seems to have come from a pretty nicely “served” background. So does she count officially as being Black? Or would Google lump her in with the evils of whiteness category?

The more the left continues to divide the country along racial lines with the full cooperation of the Democratic Party and most of the media, the more bizarre these conversations are becoming. I sort of dread reaching the point where computer algorithms will be sorting the general population and deciding which side of the battle each of us must sign up for. And, yet again… I guess that whole dream about judging people by the content of their character is pretty much out the window.
