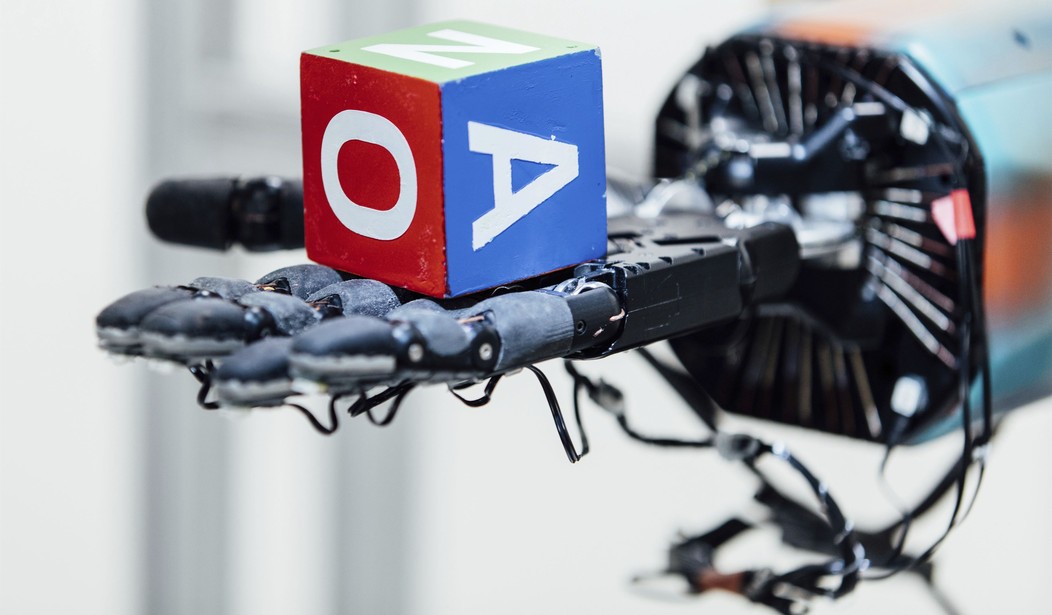

Earlier this week, we discussed how the board of directors at OpenAI attempted to fire CEO Sam Altman and how a revolt at the company led to his reinstatement and the removal of the board. It was a remarkable example of employees overriding the will of the governing board and changing the company’s direction. What wasn’t clear at the time was why the board tried to remove the man behind ChatGPT in the first place. But now more indications of their reasoning have come to the surface. It apparently wasn’t a case of different “visions” for the corporation’s future, but a fear that Altman was on the verge of doing something that could have catastrophic consequences for humanity. Altman and his team had reportedly made a breakthrough with a project known as Q* (pronounced “Q Star”) that would allow the Artificial Intelligence to begin behaving in a way that could “emulate key aspects of the human brain’s functionality.” In other words, they may be close to allowing the AI to “wake up.” (Daily Mail)

Open AI researchers warned the board of directors about a powerful AI breakthrough that could pose threats to humanity, before the firing and rehiring of its CEO Sam Altman.

Several staff researchers sent a letter to the OpenAI board, warning that the progress made on Project Q*, pronounced as Q-star, had the potential to endanger humanity, two sources familiar with Altman’s ouster told Reuters.

The previously unreported letter ultimately led to the removal of Altman, the creator of ChatGPT, and the ensuing chaotic five days within the startup company.

A corporate letter released to the media revealed that the board had been made aware of the Q* team’s progress in realizing a breakthrough that could lead to true Artificial General Intelligence. In other words, ChatGPT could begin to have self-awareness similar to human intelligence and potentially even superior to it. The letter was written by former members of the team who alleged ‘dishonest’ and ‘manipulative’ leadership by Altman and Brockman when talking to the board about their advancements.

The authors also questioned Altman’s commitment to “prioritize the benefit of humanity.” Some board members had previously questioned Altman’s commitment to installing “guardrails” to ensure that an “awoken” AI would not pose serious dangers to mankind. The authors included a claim that “The future of artificial intelligence and the well-being of humanity depend on your unwavering commitment to ethical leadership and transparency.”

Reuters also reported on the letter and spoke with some of the sources, who described some of the threats they saw as potentially on the horizon and what Q* is likely capable of.

Given vast computing resources, the new model was able to solve certain mathematical problems, the person said on condition of anonymity because they were not authorized to speak on behalf of the company. Though only performing math on the level of grade-school students, acing such tests made researchers very optimistic about Q*’s future success, the source said.

This gives us a glimpse of how the next generation of ChatGPT could be able to “emulate key aspects of the human brain’s functionality.” The earlier versions of AI were only able to accept requests from users and assemble matching answers from a vast library of text generated by humans. But this next version was able to solve unique mathematical and scientific problems, the answers to which might not exist in its library. The Debrief recently took a look into what exactly that might mean, though it’s a bit dense for the layman.

Researchers at the University of Cambridge have been working on something that appears similar to what’s being done with Q*. Their system was tasked with finding the shortest path through a simple, two-dimensional maze. Demonstrating adaptive abilities not seen in earlier AI models, the bot was able to “learn” from running into dead ends and determine the most efficient way to solve the puzzle.

Initially, the artificially intelligent brain-like system doesn’t know the correct path through the maze. However, as it continued to run into dead ends, the system learned from its errors, adjusting the strength of its nodal connections and developing centralized node hubs for efficient information transfer.

Researchers observed that the AI system employed methods akin to human learning, utilizing strategies similar to those the human brain applies in solving complex problems.

Remarkably, the artificially intelligent brain-like system began to exhibit flexible coding, where individual nodes adapted to encode multiple aspects of the maze task at different times. This dynamic trait mirrors the human brain’s versatile information-processing capability.
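For readers who want to see what “learning from dead ends” can look like in practice, here is a minimal Python sketch. To be clear about the assumptions: this is not the Cambridge researchers’ system, and it is not a claim about what Q* is. It swaps in a much simpler, textbook-style technique (a table of action values updated by trial and error, in the spirit of tabular Q-learning), and the maze layout and every function name are made up for illustration. Each step costs one point, so bumping into walls and wandering down dead ends makes those choices look worse the next time around.

```python
from collections import deque

# Hypothetical 5x5 maze: S = start, G = goal, # = wall, . = open floor.
MAZE = [
    "S.#..",
    ".##.#",
    "...#.",
    ".#...",
    "...#G",
]
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def find(maze, ch):
    """Return the (row, col) of the first cell containing ch."""
    for r, row in enumerate(maze):
        c = row.find(ch)
        if c != -1:
            return (r, c)
    raise ValueError(f"{ch!r} not found in maze")


def step(maze, state, action):
    """Move one cell; bumping into a wall or the edge leaves the agent in place."""
    r, c = state[0] + action[0], state[1] + action[1]
    if 0 <= r < len(maze) and 0 <= c < len(maze[0]) and maze[r][c] != "#":
        return (r, c)
    return state


def run_episode(maze, q, start, goal, max_steps=1000):
    """One greedy walk through the maze, updating action values as it goes."""
    state, path, changed = start, [start], False
    for _ in range(max_steps):
        if state == goal:
            break
        values = q.setdefault(state, [0] * len(ACTIONS))  # untried moves look good
        a = values.index(max(values))                     # pick the best-looking move
        nxt = step(maze, state, ACTIONS[a])
        future = 0 if nxt == goal else max(q.setdefault(nxt, [0] * len(ACTIONS)))
        target = -1 + future  # every step costs 1, so wasted moves lose value
        if values[a] != target:
            values[a] = target
            changed = True
        state = nxt
        path.append(state)
    return path, changed


def train(maze, max_episodes=10_000):
    """Repeat episodes until one reaches the goal without changing any value."""
    start, goal = find(maze, "S"), find(maze, "G")
    q = {}
    for episode in range(1, max_episodes + 1):
        path, changed = run_episode(maze, q, start, goal)
        if not changed and path[-1] == goal:
            return path, episode
    raise RuntimeError("values did not settle within max_episodes")


def shortest_path_length(maze):
    """Breadth-first search, used only to check the learned route."""
    start, goal = find(maze, "S"), find(maze, "G")
    seen, queue = {start}, deque([(start, 0)])
    while queue:
        state, dist = queue.popleft()
        if state == goal:
            return dist
        for action in ACTIONS:
            nxt = step(maze, state, action)
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    raise ValueError("goal is unreachable")


if __name__ == "__main__":
    path, episodes = train(MAZE)
    print(f"settled after {episodes} episodes; route takes {len(path) - 1} steps")
    print(f"shortest possible route: {shortest_path_length(MAZE)} steps")
```

Nobody tells the program which way to go. It starts out assuming every move is equally promising, pays a small price for each wasted step, and stops once a full run changes none of its estimates. At that point the route it follows matches the shortest one found by the brute-force check at the bottom.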

The Cambridge result is both amazing and rather frightening at the same time. It’s also something almost entirely new. Machines have always run code. The code either works and produces the desired outcome or it doesn’t. But suddenly these developers are witnessing “flexible coding.” The system is doing things it wasn’t specifically programmed to do. The earlier large language models, such as the first versions of ChatGPT, produced answers based on patterns in the vast body of human-written text they were trained on. If nothing resembling a solution to the maze appeared in that text, they would be unlikely to deliver one.

Now the bot is “figuring things out.” That certainly sounds a lot like “thinking” to me. Does this cross the line from a very complex machine to an actual intelligent entity? (Albeit a non-human intelligence.) We may not know the answer until the day that one of Altman’s people assigns a new task to ChatGPT and it responds by saying, “That’s stupid. Why don’t you figure it out for yourself?” Or perhaps even worse, “Don’t bother me. I’m working on something else.”

Buckle up, campers. The ride may be getting a bit bumpy from here on out.
